New from the Qwen team: A unified view of Attention and Residual Sinks in Transformers

Paper title: A Unified View of Attention and Residual Sinks: Outlier-Driven Rescalin

...

Less is Enough: Synthesizing diverse data in the feature space of large models

Paper title: Less is Enough: Synthesizing Diverse Data in Feature Space of LLMs

Paper

...

Tencent Hunyuan proposes G-OPD: Generalized on-policy distillation and reward extrapolation that go beyond the teacher model

Paper title: Learning beyond Teacher: Generalized On-Policy Distillation with Reward

...

Tencent Hunyuan proposes Composition-RL: Improving the efficiency of LLM reinforcement learning by composing verifiable prompts

Paper title: Composition-RL: Compose Your Verifiable Prompts for Reinforcement Learn

...

Shanghai Jiao Tong University & Qwen propose OPUS: On aligning data selection with optimizer geometry in LLM pretraining

Paper title: OPUS: Towards Efficient and Principled Data Selection in Large Language

...

An analysis of entropy dynamics in reinforcement fine-tuning of large language models

Paper title: On the Entropy Dynamics in Reinforcement Fine-Tuning of Large Language

...

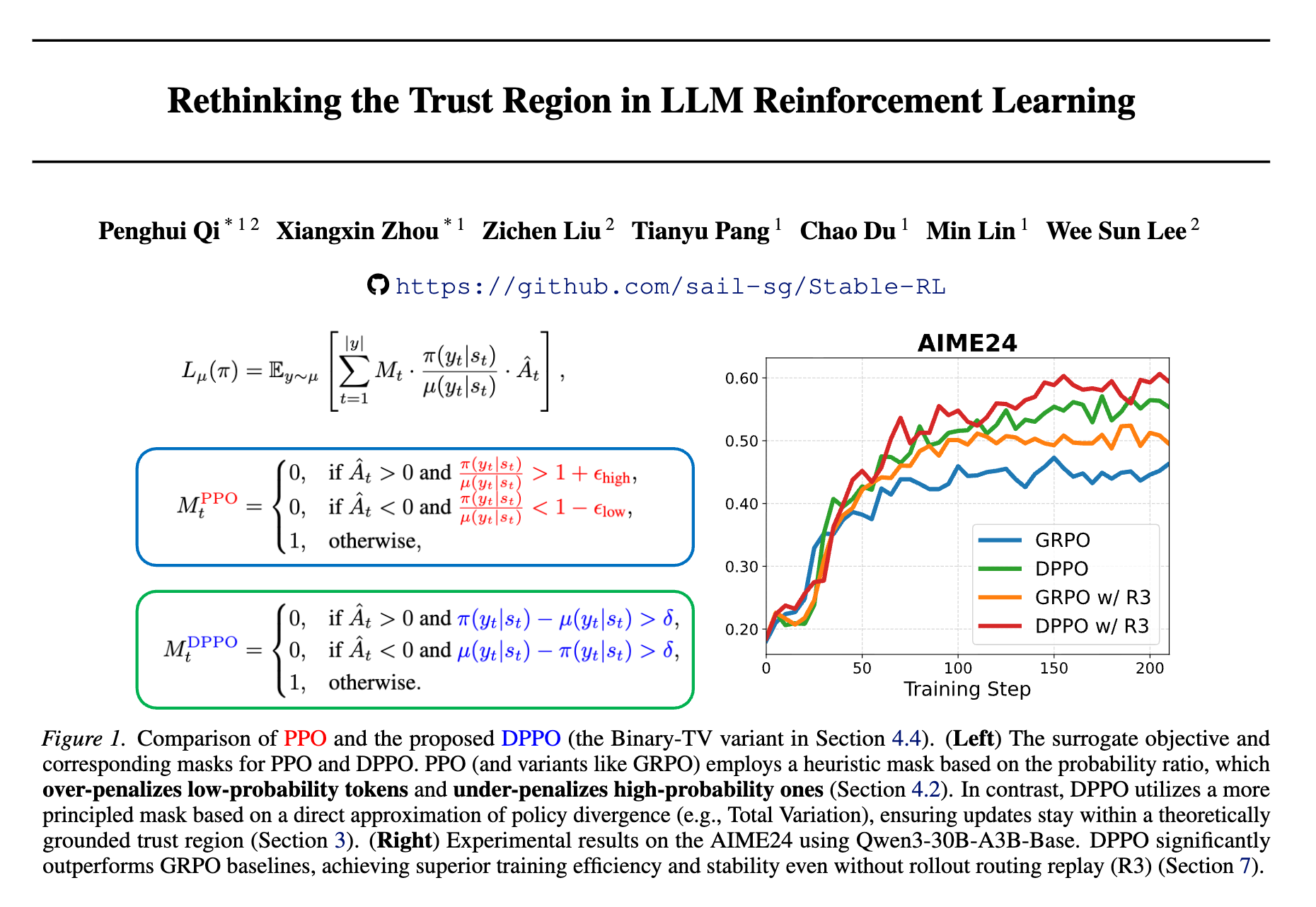

Sea AI Lab proposes DPPO: Rethinking the trust region in the PPO algorithm

Paper title: Rethinking the Trust Region in LLM Reinforcement Learning

Paper link: https:

...

MaxRL: Revisiting the unification of reinforcement learning and maximum likelihood estimation

TL;DR

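In sampling-based reasoning tasks (such as math problem solving and code generation), reinforcement learning (RL) is usually regarded as a solution for non-differentiable optimization. However, from CMU, Tsinghua University, UC Be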

...

A new paradigm for Agentic RL: Combining rules, model rewards, and natural-language critiques in the reinforcement learning loop

Paper title: Exploring Reasoning Reward Model for Agents

Paper link: https://arxiv.org/pd

...

Self-distillation optimization with SDPO: How can rich textual feedback break the credit-assignment bottleneck in RLVR?

Paper title: Reinforcement Learning via Self-Distillation

Paper link: https://arxiv.org/p

...